When we think of elder abuse, we often think of caregivers physically abusing patients in their care. However, elder abuse includes other forms of exploitation as well. Financial exploitation of elderly people seems to be among the fastest-growing fraud types. While I have been engaged with AARP and other organizations that raise awareness of elder abuse, I don’t think I fully realized the extent of it until I recently browsed my mother’s Facebook page.

I don’t spend much time on Facebook, but I was appalled by what I recently discovered. The problem was not just the rampant catfishing but also Facebook’s seeming indifference to this specific challenge. Facebook does offer ThreatExchange for free to all platforms to facilitate cross-platform threat sharing, and it takes down over 1 billion fake accounts per quarter. Even so, Meta properties still facilitate over 70% of the fraud seen by some very large banks.

Facebook has certainly taken steps to manage catfishing, but my recent experiences show huge gaps in its attempts to mitigate this crime. I will share what I have learned. I hope it raises awareness of this crime and prompts Facebook to review and improve its policies for protecting legitimate platform participants.

Another thing that bothers me about what I am about to share is that high-ranking members of our military are also being exploited by these crimes. Their photos, bios, names and experiences (in effect, their full identities) are being stolen, making them unwitting victims of impersonation. Army Colonel David Blackmon has taken up this fight since he learned his likeness was being used to perpetrate these crimes. Many service members don’t even know they are being impersonated. A single identity is often copied across many accounts that reuse exactly the same information, down to the names and images.

What I Discovered

I noticed an obvious catfishing comment on a post my mother made recently. The commenter’s profile image had an American flag in the background. I clicked through, fully aware that it was a catfishing account, and reported it to Facebook. I then checked a few of her other recent posts and found several more impersonators, all leaving catfishing comments on my mother’s posts. Take a look:

All of these “posters” have profiles impersonating high-ranking military members. Several more were not military. Every single one of these comments is a prompt for my mom to send a friend request. With large language models now in widespread use, comments like these have become a trend, and they are easy to identify. Facebook clearly has yet to address this issue, but at least I can report these accounts.

Reporting Fraud to Facebook

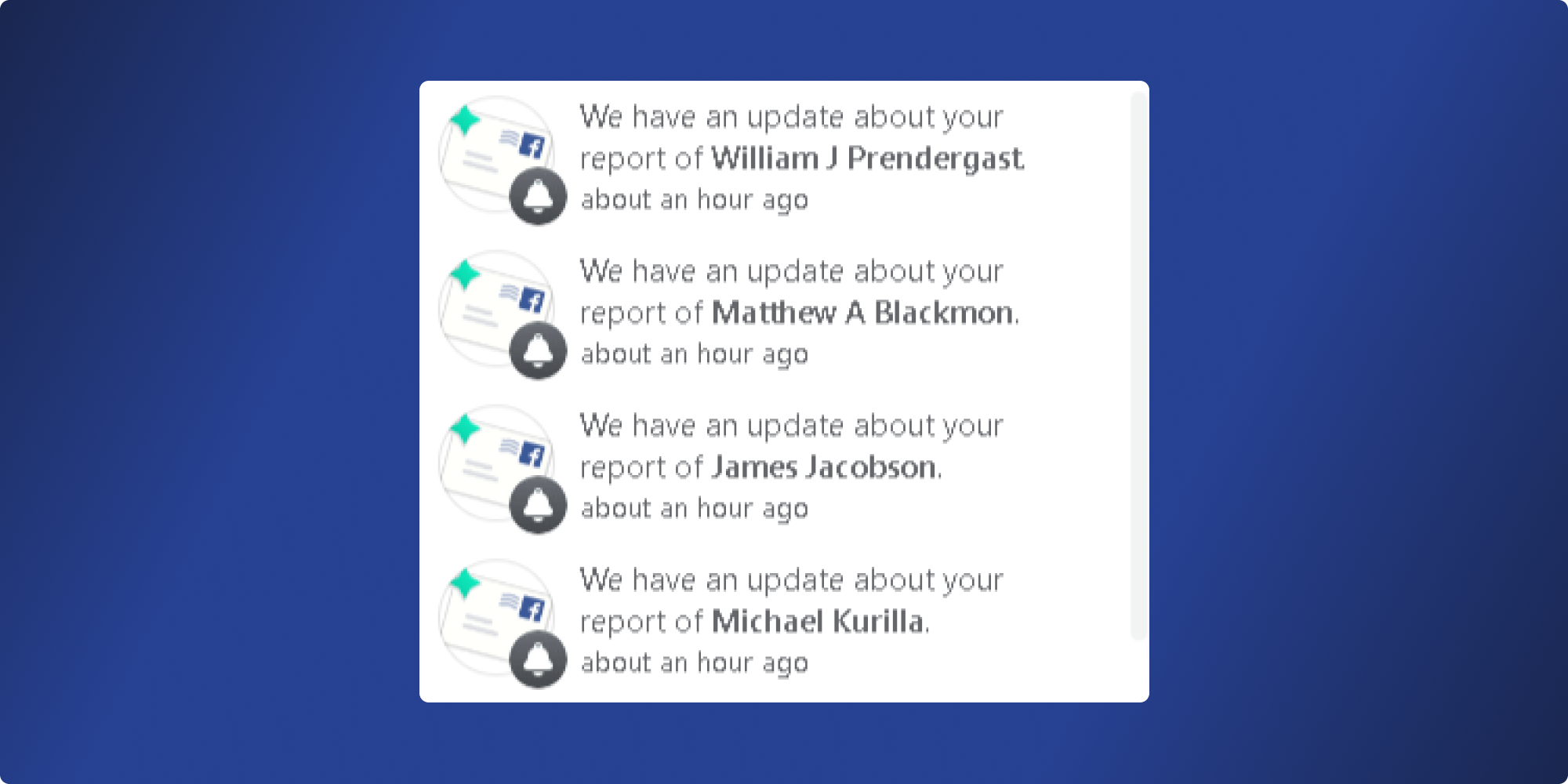

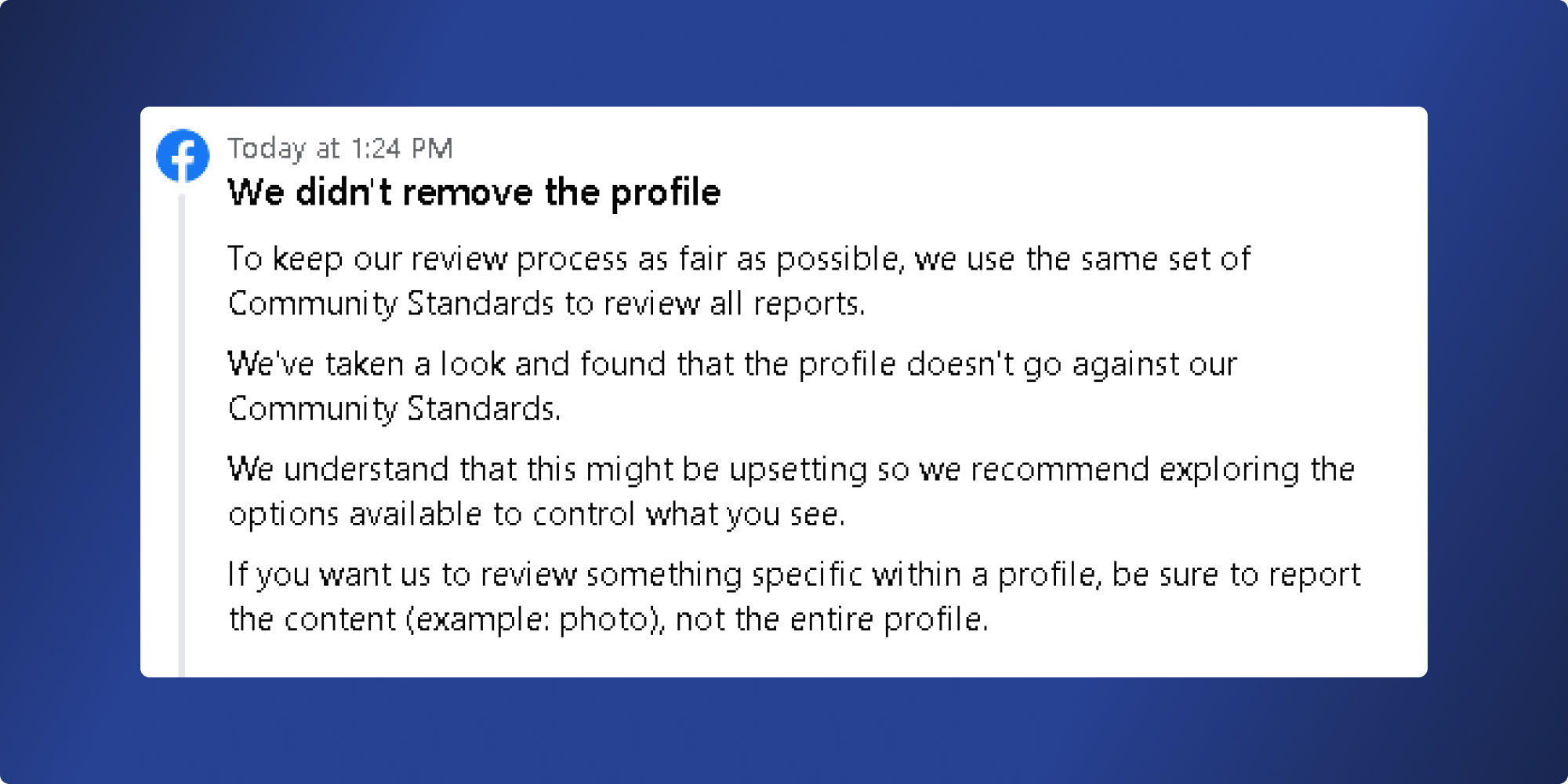

Well, you can report them. But Facebook’s process does not currently seem effective at removing them quickly. Here are the responses I received from Facebook:

For each impersonation account I reported, I received the following response from Facebook:

I’m sure repeated reports are tracked, but Facebook did not remove any of the profiles based on my reports. These threat actors remain free to continue perpetrating their scams.

Duplicate Accounts

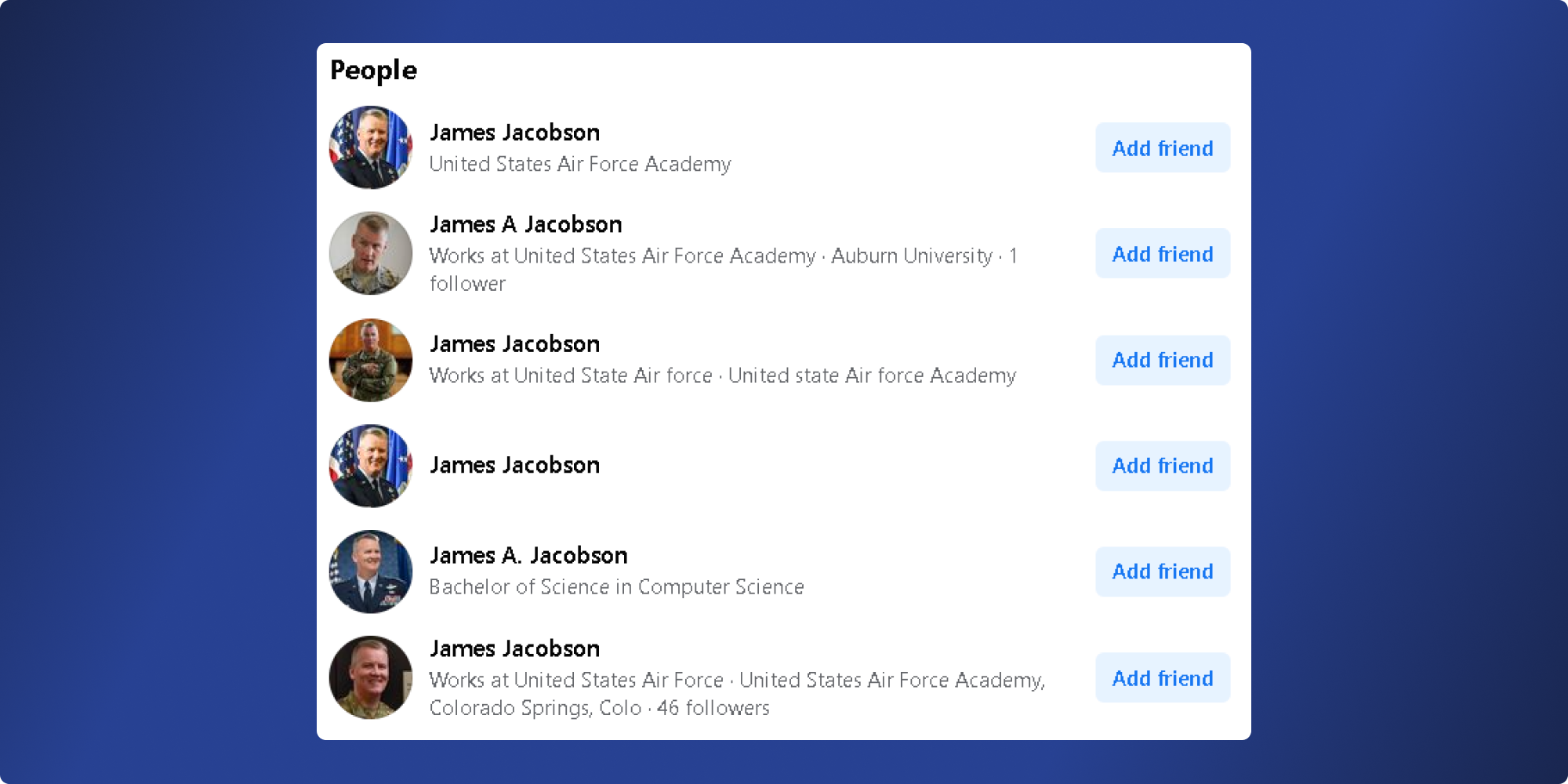

After seeing Facebook’s response, I became curious whether Facebook was identifying duplicate accounts at all. Let’s see what happens if I search for James Jacobson:

This is what I found. Every profile listed under this name presents itself as the same person. Each of these is fake, depicting the same victim of likeness theft. I wonder if General Jacobson knows the extent to which his image and profile are being exploited to perpetrate catfishing fraud targeting the elderly. Whatever Facebook is doing to prevent this is not working. Perhaps the fraudsters are creating these accounts faster than Facebook can take them down.

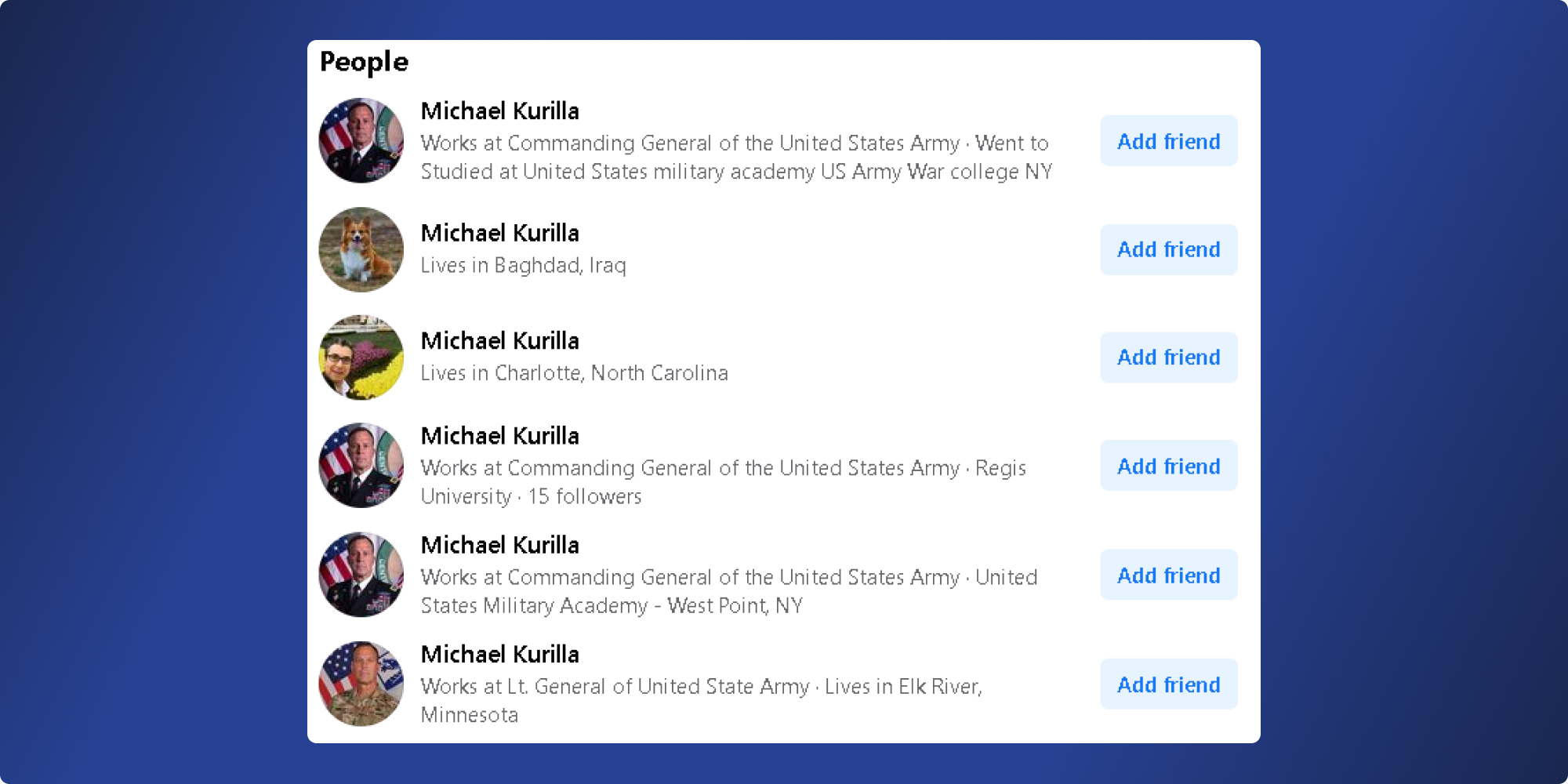

I then reviewed another profile that targeted my mother’s account. Michael Kurilla? Same story:

This is just the first page of profiles matching “Michael Kurilla.” There are many more fake accounts attributed to this military leader. The same appears to be true for William Pendergast. The only exception on this list is Matthew Blackmon.

What next?

In writing this article, I hope Facebook takes notice. I hope the company does right by these military leaders and by the elderly in our communities. More importantly, I hope it introduces more solutions to detect this type of fraud on its platform. In the game of cat and mouse between fraud fighters and fraudsters, the volume of fraudulent accounts is overwhelming the means to prevent them.

Luckily, my mother is smart enough to ignore these posts. But the numbers suggest that the fraudsters behind these accounts are wildly successful, to the tune of hundreds of millions of dollars per year, perhaps billions. It is hard to say, because this crime is widely considered under-reported.

We can also educate the people in our networks to recognize these scams and protect themselves.

How Catfishing Scams Work

Catfishing scams typically involve a few common elements:

- Fake Profiles: Scammers create elaborate online profiles with attractive photos and compelling personal stories. These profiles may appear genuine and trustworthy.

- Building Trust: Once the fake profile is established, scammers initiate contact with potential victims, often on social media, dating apps, or online communities. They engage in conversations, establish rapport, and build emotional connections.

- Manipulative Tactics: As trust deepens, scammers may begin asking for money, personal information, or compromising photos. They often fabricate stories of personal crises or emergencies to exploit their victims’ empathy and generosity.

- Revealing the Truth: Sometimes, the scammer’s true identity is never exposed, leaving victims emotionally devastated and financially drained. However, some victims do eventually uncover the deception, leading to emotional turmoil and often legal action.

How to Protect Yourself from Catfishing Scams

- Stay Skeptical: Be cautious when interacting with strangers online, especially if they seem too good to be true. Remember that anyone can create a fake profile.

- Verify Identities: If you are unsure whether someone is who they claim to be, use reverse image searches to check whether their photos appear elsewhere on the internet. Genuine individuals will not hesitate to provide additional verification. If you get a new request from someone you believe you are already connected with, be suspicious. Check that no one has created a duplicate account impersonating someone you already know and trust.

- Guard Personal Information: Avoid sharing personal or sensitive information with people you’ve met online. Be wary of anyone who pressures you to reveal such details.

- Be Cautious with Finances: Never send money or financial information to someone you’ve only met online, no matter how compelling their story may be.

- Trust Your Instincts: If something feels off or suspicious, trust your gut feeling. Cut off contact and report the profile if necessary.

- Communicate Openly: Talk to friends and family about your online interactions. They can provide valuable insights and support if you suspect you’re being catfished.

- Report Scammers: Most social media platforms and dating apps have reporting mechanisms to flag suspicious accounts. Use these features to protect others from falling victim to the same scam. Even if your reports seem to fall on deaf ears today, that may be changing.

Catfishing scams are a sobering reminder of the risks associated with online interactions. By staying vigilant, verifying identities and following safety guidelines, you can reduce your vulnerability to these deceptive schemes. Genuine connections can be found online, but it’s essential to exercise caution and prioritize your safety in the digital world, even if the platforms we use fail to do the same.

Moving forward

Dealing with this form of fraud requires a two-pronged approach. The first prong is the existing backlog. Keeping up with the sheer number of duplicate accounts created on Facebook must be akin to digging a hole in a river. Trust Stamp’s unsupervised biometric model can be used to group accounts by face and start to eliminate the existing problem. Accounts whose profile photos show the same person can be linked and investigated together.
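To make the idea of grouping accounts by face more concrete, here is a minimal sketch. It is not Trust Stamp’s model, whose internals are not described here. It assumes each account’s profile photo has already been turned into a face embedding (a numeric vector) by some face-recognition model. Two accounts are treated as showing the same face when the cosine similarity of their embeddings meets a threshold. The function name `group_accounts_by_face`, the account IDs and the 0.9 threshold are all hypothetical, chosen only for illustration.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def group_accounts_by_face(
    embeddings: Dict[str, Sequence[float]], threshold: float = 0.9
) -> List[List[str]]:
    """Hypothetical helper: link accounts whose profile-photo embeddings look alike.

    Uses union-find, so if A matches B and B matches C, all three share a group.
    Returns only groups with more than one account (candidates for investigation).
    """
    parent = {acct: acct for acct in embeddings}

    def find(x: str) -> str:
        # Walk up to the root, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(x: str, y: str) -> None:
        rx, ry = find(x), find(y)
        if rx != ry:
            parent[ry] = rx

    accounts = list(embeddings)
    # O(n^2) pairwise comparison: fine for a sketch, far too slow at platform scale.
    for i in range(len(accounts)):
        for j in range(i + 1, len(accounts)):
            a, b = accounts[i], accounts[j]
            if cosine_similarity(embeddings[a], embeddings[b]) >= threshold:
                union(a, b)

    groups: Dict[str, List[str]] = {}
    for acct in accounts:
        groups.setdefault(find(acct), []).append(acct)
    return [sorted(g) for g in groups.values() if len(g) > 1]


if __name__ == "__main__":
    # Toy 3-dimensional "embeddings"; real face embeddings have hundreds of dimensions.
    sample = {
        "acct_1": [0.9, 0.1, 0.0],
        "acct_2": [0.88, 0.12, 0.01],
        "acct_3": [0.0, 0.2, 0.98],
    }
    print(group_accounts_by_face(sample))  # [['acct_1', 'acct_2']]
```

Union-find makes the grouping transitive, so a chain of near-matches ends up in one cluster. That is useful for catching many copies of one stolen identity, but it can also merge different people if the threshold is too loose. A real system would also need a calibrated threshold and an approximate nearest-neighbor index in place of this pairwise loop.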

Simultaneously, accounts can be tied to biometrics to prevent more inauthentic profiles from being created. Technology like Trust Stamp’s Biometric Multi-Factor Authentication (MFA) can protect users by ensuring that uploaded photos show the account owner and not someone else.

What would this look like? When users upload a profile picture, they are prompted to take a selfie, which is then compared against the photo being uploaded. Beyond the biometric match itself, several checks run in the background to ensure that a legitimate, live person is present for the selfie.

Once authenticity is confirmed, Trust Stamp’s IT2 biometric token can be used for comparison as new photos are uploaded. If the tokens do not match, the new picture does not show the person who created the profile.
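The upload check described above can be sketched as a simple comparison. Trust Stamp’s IT2 token is proprietary and is not reproduced here. In this hypothetical sketch, a plain face embedding captured at the verified selfie step stands in for the token. A new profile photo is accepted only if its embedding is close enough to that stored reference. The `Account` record, the `photo_matches_owner` function and the 0.8 threshold are illustrative assumptions, not any vendor’s API.

```python
import math
from dataclasses import dataclass
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


@dataclass
class Account:
    """Hypothetical account record: the reference embedding comes from a verified live selfie."""
    account_id: str
    reference_embedding: Sequence[float]


def photo_matches_owner(
    account: Account, photo_embedding: Sequence[float], threshold: float = 0.8
) -> bool:
    """Accept an uploaded photo only if it is close enough to the owner's verified reference."""
    return cosine_similarity(account.reference_embedding, photo_embedding) >= threshold


if __name__ == "__main__":
    owner = Account("acct_1", [0.6, 0.8, 0.0])
    print(photo_matches_owner(owner, [0.58, 0.81, 0.05]))  # True: same face
    print(photo_matches_owner(owner, [0.0, 0.1, 0.99]))    # False: someone else's photo
```

The threshold controls the trade-off between blocking impersonators and rejecting genuine owners whose photos vary in lighting or angle. Any real deployment would calibrate it on measured match scores rather than a hand-picked constant.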

This technology could be a game-changer for the safety of social media users and help prevent catfishing scams as prevalent as these. By ensuring that the profile picture matches the actual user, platforms can significantly reduce the incidence of fake profiles. That would protect users, especially vulnerable groups like the elderly, from the emotional and financial harm caused by catfishing scams. Implementing such robust verification methods not only enhances user safety but also bolsters the credibility and trustworthiness of the platform itself.